While customer surveys can yield amazing insights into what your customers want and need, they can also be a liability if the underlying survey questionnaire is flawed. One of the most common causes of unreliable survey feedback is the biased survey question.

Customer feedback is not easy to come by, which makes every survey response all the more significant. It’s important to make sure the input you collect from respondents is as unbiased as possible, and provides a clear lens into the customer experience. When you have bad survey questions in your questionnaire, you end up wasting a valuable opportunity to surface critical insights from customers and employees about how to improve your products and services.

What are biased survey questions?

A survey question is biased if it is phrased or formatted in a way that skews people towards a certain answer. Survey question bias also occurs if your questions are hard to understand, making it difficult for customers to answer honestly.

Either way, poorly crafted survey questionnaires are a problem as they result in unreliable feedback and a missed opportunity to understand the customer experience.

In previous posts, we’ve given examples of customer satisfaction survey questions and survey design tips to help you craft the perfect customer experience survey. In this post, we’ll help you identify and fix biased survey questions, so that you can avoid inaccurate results due to poor question phrasing.

Here are 7 common examples of biased survey questions, and how to fix them for your customer experience survey.

1. Leading questions

Leading questions sway folks to answer a question one way or another, as opposed to leaving room for objectivity. If you watch legal dramas, you’re likely already familiar with leading questions. After watching his witness get harangued, the lawyer issues an objection for “leading the witness,” or putting words in the witness’s mouth.

In a courtroom setting, leading questions are usually filled with detail and suggest what a witness has experienced, as opposed to letting the witness explain what happened. In the context of a customer survey, you want to let your customers give an accurate account of their experience, instead of dictating how they should view it.

Identifying a leading question

You can usually identify leading questions by looking for subjective adjectives, or context-laden words that frame the question in a positive or negative light.

While a leading question may be a bit more innocuous in your survey situation than it is on the witness stand, it’s still important to avoid a leading question so that you have unbiased survey results. Here are some examples of leading questions, and how to fix them:

- Leading question: How great is our hard-working customer service team?

Fixed: How would you describe your experience with the customer service team?

- Leading question: How awesome is the product?

Fixed: How would you rate this product?

- Leading question: What problems do you have with the design team?

Fixed: How likely are you to recommend working with the design team?

Each of the examples of biased survey questions listed above contains a judgment, implying that the customer service team is “great” and “hard-working,” that the product is “awesome,” or that you have “problems” with the design team. The corrected phrasing, on the other hand, is more objective, and contains no insinuations.

Leading questions are often unintentional, but if customers perceive your questions as manipulative, a single leading question could lead to a higher survey drop-off rate, a negative impression of your company, or a severely biased set of responses. Any one of these outcomes can significantly undermine the end result you’re working towards with your customer experience program.

Fixing a leading question

When writing your list of survey questions, phrase your questions objectively, and provide answer scales with equally balanced negative and positive options.

2. Loaded/Assumptive questions

The goal of your survey should be to get an honest response that will offer insight and feedback into the customer experience. A loaded question contains an assumption about a customer’s habits or perceptions. In answering the question, people also inadvertently end up agreeing or disagreeing with an implicit statement.

Consider this question: “Will that be cash or credit?” The assumption in this loaded question is that the customer has already made the decision to purchase. If they answer, they’re implicitly agreeing that they will purchase.

However, if this question came after a customer had already expressed a desire to purchase, it wouldn’t feel loaded at all. With loaded questions in customer satisfaction surveys, it’s usually about context, and whether you’ve properly taken customer data into consideration.

Identifying a loaded question

Checking for loaded questions can be tricky. Make sure you’re reading through the entire survey, since context for the assumption you’re making may come from a previous question, or from your customer database.

Here are some examples of loaded questions:

- Loaded question: Where do you enjoy drinking beer?

Required qualifying information: That the customer drinks beer

- Loaded question: How often do you exercise twice a day?

Required qualifying information: That the customer exercises, and that they exercise twice a day

Because these types of questions are often context-based, fixing these mistakes doesn’t always require rephrasing the question. Instead, ensure that your previous survey question or existing customer information qualifies the potentially “loaded” question.

For example, you could first ask whether your customer exercises twice a day, and then ask how often they do so. If they don’t actually exercise twice a day, use conditional skip logic so that they never have to answer the irrelevant follow-up question.
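If you build surveys in code rather than in a survey tool, conditional skip logic can be sketched roughly like this. It is a minimal illustration, not how any particular survey platform implements it: the `QUESTIONS` structure, the `show_if` key, and the `next_question` function are all hypothetical names invented for this example.

```python
from typing import Optional

# Hypothetical survey definition. A question with "show_if" is only shown
# when the named qualifying question received the required answer.
QUESTIONS = [
    {"id": "exercises_twice", "text": "Do you exercise twice a day?"},
    {
        "id": "twice_frequency",
        "text": "How many days a week do you exercise twice a day?",
        "show_if": ("exercises_twice", "yes"),
    },
]


def next_question(answers: dict) -> Optional[dict]:
    """Return the first unanswered question whose condition is met."""
    for question in QUESTIONS:
        if question["id"] in answers:
            continue  # already answered
        condition = question.get("show_if")
        if condition is not None:
            qualifier_id, required_answer = condition
            if answers.get(qualifier_id) != required_answer:
                continue  # skip: the respondent did not qualify
        return question
    return None  # nothing left to ask
```

A respondent who answers “no” to the qualifying question never sees the follow-up, so they are never forced to agree with an assumption that doesn’t apply to them.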

Another way to avoid a loaded question is by fine-tuning when you send your survey. For instance, if you’d like to know why an ecommerce shopper prefers your web experience to a competitor’s, you wouldn’t ask that question while they’re browsing your site — that’d be too early. You’d pop the question in a web survey on your checkout page, when you know for sure that they’ve chosen to go with you.

Check the answer options as well as the question phrasing. Some loaded questions can be mitigated by providing an “Other” or “I do not ____” answer choice as a way to opt out.

Fixing a loaded question

To avoid survey question bias, don’t make unfounded assumptions about your customers. Make sure you’re qualified to ask the question, skip the question if it’s irrelevant, or provide an answer option that lets the customer tell you the scenario doesn’t apply to them.

3. Double-barreled questions

To understand the double-barreled question, just think of a double-barreled shotgun. It shoots from two barrels in one go.

In the realm of biased questions, that double-barreled barrage is really a convoluted question involving multiple issues. By asking two questions in one, you make it difficult for customers to answer either one honestly.

Identifying a double-barreled question

When you proofread your questions, check for “and” or “or.” If you need a conjunction, chances are you’re asking about multiple things.

- Double-barreled product question: Was the product easy to find and did you buy it?

Fixed example (part 1): The store made it easy for me to find the product.

Fixed example (part 2): Did you buy a product from our company during your last visit?

- Double-barreled onboarding question: How would you rate the training and onboarding process?

Fixed onboarding example (part 1): How would you rate the training materials?

Fixed onboarding example (part 2): How would you rate the onboarding process?

As you can see in this biased question example, the fix for a double-barreled question is to split it up. Doing this has two benefits: your customers don’t get confused, and you can interpret the results more accurately. After all, in the product example, if a customer had answered “yes,” which question would they be answering? That they found the product easily, or that they purchased it? You would have no way of knowing via the survey results.

The other fix would be to ask only the question that meets your survey goals. Are you looking for feedback on how well your store is laid out? Then ask only whether the product was easy to find. For the onboarding question, would you like feedback on the overall onboarding process, or just one part of it, the training materials?

Fixing a double-barreled question

Ask one question at a time. Don’t overcomplicate. Check out this guide on how to avoid double-barreled questions in your surveys to collect accurate feedback.
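If you keep your questions in a spreadsheet or script, the proofreading check for “and” and “or” can be roughed out in a few lines of Python. This is a minimal sketch: the function name is made up for illustration, and a match is only a prompt to reread the question, since plenty of conjunctions are harmless.

```python
import re

# Match "and" / "or" only as whole words, so "for" or "brand" don't trigger.
CONJUNCTION = re.compile(r"\b(?:and|or)\b", re.IGNORECASE)


def may_be_double_barreled(question: str) -> bool:
    """Flag a question for manual review if it contains a conjunction."""
    return CONJUNCTION.search(question) is not None


questions = [
    "Was the product easy to find and did you buy it?",
    "How would you rate the training materials?",
]
for q in questions:
    if may_be_double_barreled(q):
        print("Review:", q)
```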

4. Jargon

Jargon is a word or phrase that is difficult to understand or not widely used by the general population. For your customer survey questions, keep your language simple and specific. Don’t include slang, catchphrases, clichés, colloquialisms, or any other words that could be misconstrued or offensive.

If you ever need to survey customers in multiple languages or locations, removing jargon will also make your questions easier to translate and more readily understood.

Identifying jargon in your survey question

The worst part about jargon is that you may not even realize you’re using it — those phrases could be embedded in your company culture. To avoid confusing language, try to find people from multiple age groups or demographics who are not familiar with your company to test your survey.

- Product question with jargon: The product helped me meet my OKRs.

Fixed: The product helped me meet my goals.

- Service question with jargon: How was face-time with your customer support rep?

Fixed: How would you rate your experience with [team member]?

What are Objectives and Key Results (OKRs)? How do you know your customers use that system to assess their goals? Does face-time refer to the Apple video chat app, or does it mean you just spoke with a customer service team member in person? If a customer needs to spend extra time understanding the question, they may stop taking the survey altogether, or they won’t be able to answer well.

Fixing a question with jargon

Proofread and test your survey with an eye for removing all confusing language. Acronyms are a telltale sign that your survey may contain jargon.
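Since acronyms are such a common giveaway, you could scan your draft for them automatically before handing it to testers. The sketch below assumes an acronym is any run of two or more capital letters, optionally followed by a plural “s”; that rule and the `find_acronyms` name are illustrative assumptions, and the check won’t catch jargon that is spelled out in ordinary words.

```python
import re

# Two or more capital letters in a row, with an optional plural "s" (e.g. "OKRs").
ACRONYM = re.compile(r"\b[A-Z]{2,}s?\b")


def find_acronyms(text: str) -> list:
    """Return every acronym-like token found in the text."""
    return ACRONYM.findall(text)


print(find_acronyms("The product helped me meet my OKRs."))
```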

5. Double negatives

While you’re checking your survey questions for jargon, don’t forget about proper grammar. A double negative occurs when you use two negatives in the same sentence. For instance, simplify “Don’t not write clearly” to “Write clearly.”

Avoiding a double negative may seem basic, but when you’re in a rush and trying to get your survey out, it’s easy to miss. It’s also quick to fix if you know what to look for.

Identifying double negatives

Check for double negatives by looking for instances of “no” or “not” paired with the following types of words:

- No/not with “un-” prefix words (also in-, non-, and mis-)

- No/not with negative adverbs (scarcely, barely, or hardly)

- No/not with exceptions (unless or except)

Here are some examples of double-negatives, and how you can edit them out.

- Double-negative: Was the facility not unclean?

Fixed: How would you rate the cleanliness of the facility?

- Double-negative: I don’t scarcely buy items online.

Fixed: How often do you buy items online?

- Double-negative: The website isn’t easy to use unless I use the search bar.

Fixed: The website made it easy for me to find what I was looking for.

Errors like double negatives are easier to catch if you read your survey questions and answers out loud. Once you fix them, your survey will be easier to understand.

Fixing a double-negative

Two negatives make a positive, in the sense that they cancel each other out. To correct a double negative, rephrase the question using the positive or neutral version of the phrase.
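The pairings listed above can be turned into a rough automated check. This is only a sketch under stated assumptions: the `SECOND_NEGATIVES` word list is a small hypothetical starter set you would extend yourself, and `has_double_negative` is an invented name. It uses an explicit word list rather than matching prefixes such as “un-” or “in-”, because prefix matching would also flag innocent words like “under” or “inside”.

```python
import re

# First negative: "no", "not", "never", or a contraction ending in n't / n’t.
NEGATOR = re.compile(r"\b(?:no|not|never)\b|n['’]t\b", re.IGNORECASE)

# Second negative: a hypothetical starter list covering negative-prefix
# words, negative adverbs, and exceptions. Extend it for your own surveys.
SECOND_NEGATIVES = {
    "unclean", "unclear", "unhappy", "incorrect", "nonexistent", "misleading",
    "scarcely", "barely", "hardly",
    "unless", "except",
}


def has_double_negative(sentence: str) -> bool:
    """Flag a sentence that pairs a negator with a second negative word."""
    if not NEGATOR.search(sentence):
        return False
    words = re.findall(r"[a-z]+", sentence.lower())
    return any(word in SECOND_NEGATIVES for word in words)
```

Treat a flagged sentence as a candidate for rewording, not a verdict; reading the question out loud is still the more reliable test.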

6. Poor answer scale options

We’ve gone over quite a few examples of biased questions, but the question isn’t the only thing that can be biased: your answer options can cause bias, too. In fact, survey answer options are just as important as the questions themselves. If your scales are confusing or unbalanced, you’ll end up with skewed survey results.

In general, carefully consider the best way to ask a question, and then think about the response types that will most effectively allow your audience to offer sincere feedback. Check that your answer options match these criteria as you craft your survey questionnaire.

Identifying mismatched scales and poor answer options

Sync the answer scale back to the question

For example, if you’re asking a question about quality, a binary “yes/no” answer probably won’t be nuanced enough. A rating scale would be more effective.

If you ask “How satisfied” someone is, use the word “satisfied” in your answer scale. If you’re using a smiley face survey, ask your customers how happy they are.

- Mismatched answer scale example: How easy was it to log in to the company website? Answer: Yes | No

Fixed answer scale: The login prompt made it easy for me to log in. Answer scale: 1 – Strongly disagree | 2 | 3 | 4 | 5 – Strongly agree

Proofread for mutually exclusive answer options

If you’re using multiple choice answers, proofread the options to make sure they’re logical and don’t overlap. This issue often occurs with frequency and multiple choice questions. For example:

- Frequency question: How often do you check your email in a day?

Overlapping answer options: A. 0-1 time | B. 1-2 times | C. 2-3 times | D. More than 3 times

- Multiple choice question: What device do you usually use to check your email?

Overlapping answer options: A. Computer | B. Mobile Phone | C. Tablet | D. iPad

Which option does someone who checks their email once, twice, or three times a day choose? An iPad is a tablet, so which would you select? Always double check your answer options to remove redundancy and make sure the categories are mutually exclusive.
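For numeric answer options like the frequency example, the overlap check is easy to automate. The sketch below assumes each option can be written as an inclusive (low, high) range of whole numbers, with “More than 3” written as 4 and up; the `overlapping_options` function and the corrected option set are hypothetical illustrations, not a recommended scale.

```python
import math
from itertools import combinations


def overlapping_options(options: dict) -> list:
    """Return pairs of labels whose inclusive (low, high) ranges overlap."""
    clashes = []
    for (label_a, (low_a, high_a)), (label_b, (low_b, high_b)) in combinations(
        options.items(), 2
    ):
        # Two inclusive ranges overlap when each starts before the other ends.
        if low_a <= high_b and low_b <= high_a:
            clashes.append((label_a, label_b))
    return clashes


# The overlapping options from the frequency question above.
original = {"A": (0, 1), "B": (1, 2), "C": (2, 3), "D": (4, math.inf)}
print(overlapping_options(original))  # [('A', 'B'), ('B', 'C')]

# One possible mutually exclusive rewrite (hypothetical).
fixed = {"A": (0, 0), "B": (1, 2), "C": (3, 4), "D": (5, math.inf)}
print(overlapping_options(fixed))  # []
```

Category overlaps such as “Tablet” versus “iPad” can’t be caught this way and still need a human read-through.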

Cover all the likely use cases

For multiple choice questions, ensure your answer choices cover all the likely use cases, or provide an “Other” option. If your customers are constantly choosing “Other,” it’s a sign that you’re not covering your bases.

Double check your survey answer option functionality

If your survey question says you can “Check all the boxes that apply,” make sure people actually can select multiple options.

Use balanced scales

If you’re creating your own scale instead of using a templated agreement or satisfaction scale, make sure the lowest possible sentiment is at the bottom, that the best is at the top, and that the options between are equally spaced out.

- Survey question: How was our service today?

Unbalanced scale: Okay | Good | Fantastic | Unforgettable | Mind-blowing

- Survey question: How satisfied were you with our service today?

Balanced scale: Very dissatisfied | Dissatisfied | Neutral | Satisfied | Very satisfied

What’s the difference between “okay” and “good,” or “unforgettable” and “mind-blowing?” Is “okay” really the worst possible experience someone could have? With a scale like this, it would be impossible to get useful insights.

Be consistent with scale formatting

If you’re using rating scales throughout your survey, be consistent about which end of the scale is positive. For instance, if you’re using 1 to 5 scales to rate agreement or satisfaction, make sure the positive end is always the highest number on the right.

Fixing your answer scales

Read through your questions and answers, and take your survey for a test run. Make sure your answers directly correspond to the questions you’re asking, and that your answer options don’t overlap.

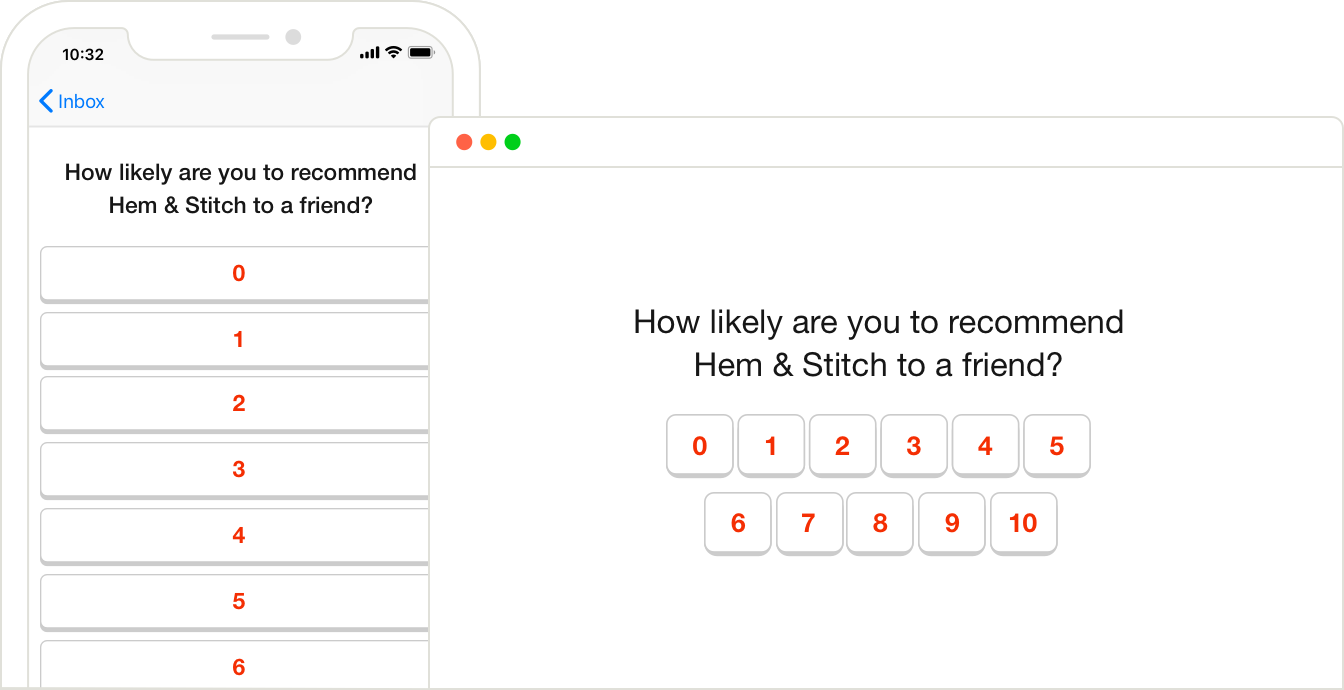

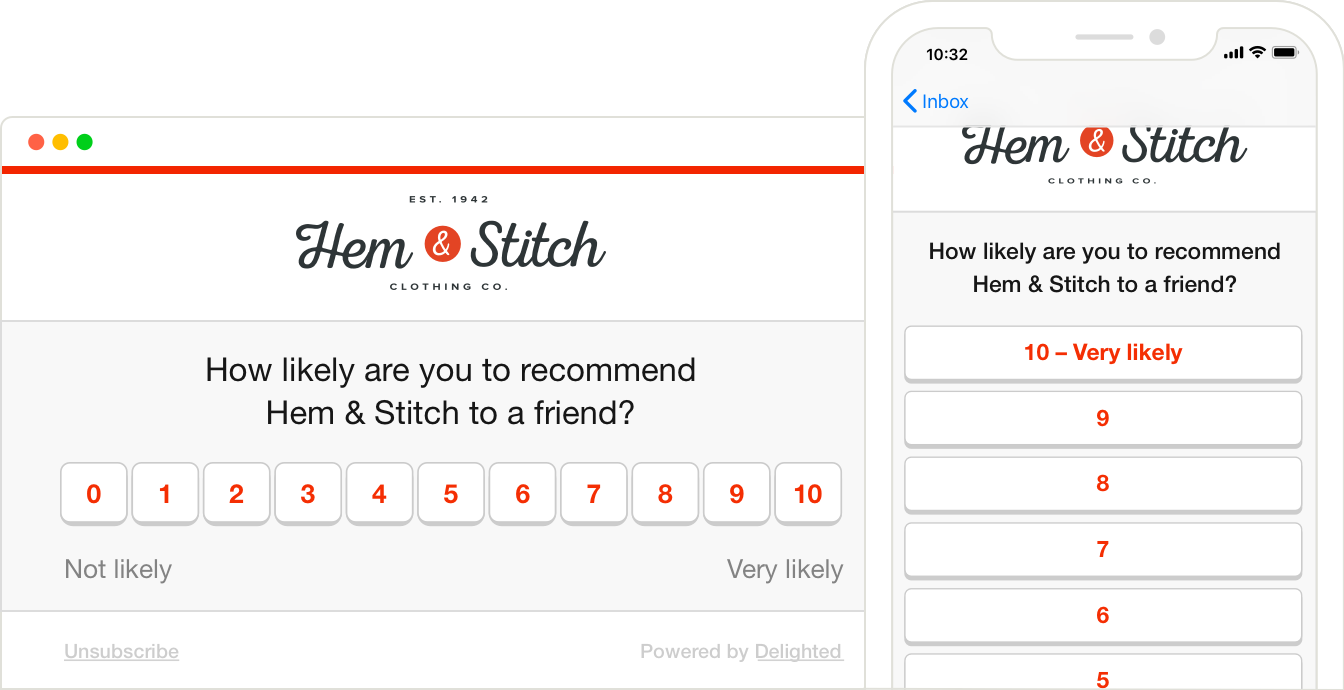

7. Confusing answer scale formatting

There’s a strong likelihood that customers will be responding to your surveys from various devices, whether that’s on desktop, mobile phone, or tablet. Your answer scale formatting needs to take this into account, so the rating options are easy to scan and understand.

Checking for user-friendly formatting

Before you deploy your survey, check how it renders on desktop and mobile for various screen widths. Try to make sure answer scales fit nicely within the frame.

Since people tend to skim, you want to make sure your answer scales are formatted as intuitively as possible. When a rating scale wraps to the next line, customers may mistakenly choose “5” because it looks like the right-most option, without scanning the higher ratings on the second line, which leads to depressed scores.

For mobile, try to have the positive end of the scale at the top, since people are used to associating a higher score with a top position.

Fixing answer scale formatting

When you design your survey, try to make it responsive to screen width so that the scales always render as you want them to. Note that not all customer experience survey platforms are created equal, as some are more optimized for multiple devices than others.

Delighted surveys are optimized for every device, so customers can respond easily and accurately.

Summary of survey questionnaire best practices

Preventing biased survey questions from slipping into your survey is easy if you know what to look for. Most of the time, it’s about taking a few extra minutes to read through your questions to make sure everything makes sense.

To review, here’s a quick checklist of dos and don’ts for a foolproof survey questionnaire:

- Don’t lead the witness: avoid leading questions

- Don’t make assumptions: avoid loaded questions

- Don’t overload your questions: avoid double-barreled questions

- Don’t use confusing language: avoid jargon

- Do write clearly: avoid double-negatives

- Do check your answers: sync your answer scales to the question

- Do consider the user experience: format your surveys for all devices

It’s worth noting that survey bias can be caused by more than just question and answer phrasing. Learn more in our complete guide to survey bias examples.

For a rundown of the entire customer experience survey process, from setting goals to reporting on results, check out this survey design guide.

Most of the steps for crafting successful surveys involve careful planning and execution, but having a tool that supports the implementation of a successful customer experience survey strategy always helps.

Use this best practice guide as you start building your first survey with Delighted’s free online survey maker. Get started today!