In a previous post on biased survey questions, we went through how bad survey questions (e.g. leading or double-barreled questions) can negatively impact your survey results. In this post, we’ll be diving into the other major cause of misleading survey data: survey bias.

First, let’s define bias in general: it is “an inclination of temperament or outlook.” The concept comes up frequently in sociology and psychology because it’s associated with prejudice or favoritism. But how does bias show up in surveys?

Survey bias is a “systematic error introduced into sampling or testing by selecting or encouraging one outcome or answer over others.” Because the error is systematic, it doesn’t average out as you collect more responses. Your results lean consistently toward certain answers, and you may end up hearing only one type of customer perspective.

There are two main buckets of customer survey bias to avoid, so that you don’t fall into the trap of basing business decisions on skewed survey results:

- Selection bias, where the results are skewed a certain way because you’ve only captured feedback from a certain segment of your audience.

- Response bias, where there’s something about how the actual survey questionnaire is constructed that encourages a certain type of answer, leading to measurement error.

Let’s start by looking at three major types of selection bias that can impact your results, namely sampling bias, nonresponse bias, and survivorship bias.

1. Sampling bias: Getting full representation

For any type of survey research, the goal is to get feedback from a group of people (in statistical terms, your “sample”) who represent the audience you care about (your “population”). Sampling bias occurs when your sample systematically leaves out part of that audience. This often happens because a customer segment was left out of the survey process and never invited to take your survey.

A Software as a Service (SaaS) company likely has multiple buyer personas who each interact with the product differently: say, the analyst who logs in every day to do tactical work, the manager who checks in on progress and monitors results, and the director who pays the bills and is on the hook for proving return on investment (ROI). Depending on how you distribute your surveys (how often, and through what channel), you could end up with feedback from only a subset of these personas. That is sampling bias.

The same is true in retail. If customers buy from you both online and in person, but you only send email surveys to your online customers, you’re missing feedback from those who purchased in-store, and your results will contain sampling bias.

Sometimes, filtering out a specific group of individuals from a survey is intentional. For instance, you might need very specific feedback on a new software feature from your power users. Or, if you’re trying to improve your ecommerce experience, you may only want feedback from people who have gone through the entire online purchasing process.

In that case, caveat the findings in your survey report by stating whose perspective they reflect and how that aligns with your survey goals.

How to reduce sampling bias

To reduce sampling bias, you may need to take a few steps back and ensure that your survey design process hasn’t left someone out.

Here are a couple of steps to follow:

- Get a good grip on what you’d like feedback on, who would be able to provide that feedback, and how you’ll be getting that feedback. Plotting out major customer journey touchpoints for each of your buyer personas is a great way to start.

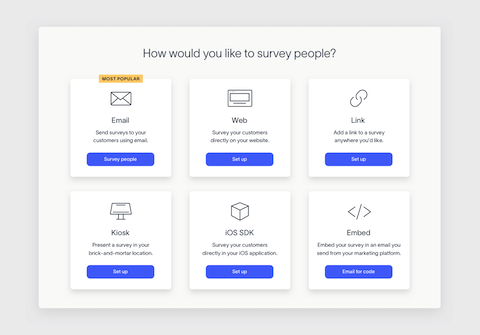

- Ensure that your survey distribution method and survey timing make sense for those customers. Would an email survey or a web survey work better? For your power users, an in-app survey is probably the best fit, while an email survey following a quarterly business presentation might work better for someone at the director or manager level.
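If your customer records note each customer’s persona and how they buy from you, you can sanity-check a distribution plan before sending anything. The Python sketch below is a minimal illustration, not a feature of any particular survey tool: the customer records, the persona and channel labels, and the `uncovered_segments` helper are all hypothetical. It lists every segment the plan would leave without a survey.

```python
from collections import Counter

# Hypothetical customer records: (customer_id, persona, purchase_channel)
customers = [
    ("c1", "analyst", "online"),
    ("c2", "manager", "online"),
    ("c3", "director", "in_store"),
    ("c4", "analyst", "in_store"),
]

# Hypothetical distribution plan: (persona, channel) -> survey method
distribution_plan = {
    ("analyst", "online"): "in_app",
    ("manager", "online"): "email",
}


def uncovered_segments(customers, plan):
    """Return {(persona, channel): customer_count} for segments the plan never surveys."""
    counts = Counter((persona, channel) for _, persona, channel in customers)
    return {segment: n for segment, n in counts.items() if segment not in plan}


print(uncovered_segments(customers, distribution_plan))
# {('director', 'in_store'): 1, ('analyst', 'in_store'): 1}
```

In this made-up example, every in-store customer falls through the cracks, which is exactly the retail scenario described earlier.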

Once you know exactly who will be receiving your survey, and how you’ll send those surveys out, your next task will be to get people to respond.

2. Nonresponse bias: Getting people to respond

Even if you’ve included your entire core audience in your survey, there’s still no guarantee that they’ll answer your survey.

For example, inactive users or people who purchased your product a long time ago are probably less likely to respond, since they may not remember their experience clearly and may not have much helpful feedback to offer. On the other hand, people who have invested a lot in your business and use your service often are probably more likely to respond, and to share higher-quality feedback.

The important thing to keep in mind is how representative your respondents are of the audience you care about. If your response rate is low but all of your key stakeholder groups are represented, it may be less important to push for more responses. However, if the distribution of responses shows that only users of a certain product, age group, or gender are responding, focus on raising response rates among the underrepresented groups to round out your feedback.
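To spot underrepresented groups, you can compare each segment’s share of responses with its share of your audience. This is a minimal sketch assuming you already have counts per segment; the `underrepresented` helper, the example counts, and the 0.8 cutoff are hypothetical choices for illustration, not a standard threshold.

```python
def underrepresented(population_counts, respondent_counts, min_ratio=0.8):
    """Flag segments whose share of responses is well below their share of the audience.

    Returns {segment: ratio}, where ratio = respondent share / population share.
    min_ratio=0.8 is an illustrative cutoff, not a statistical standard.
    """
    pop_total = sum(population_counts.values())
    resp_total = sum(respondent_counts.values())
    if resp_total == 0:
        # No responses at all: every segment is missing
        return {segment: 0.0 for segment in population_counts}
    flagged = {}
    for segment, pop_n in population_counts.items():
        if pop_n == 0:
            continue  # an empty segment can't be underrepresented
        pop_share = pop_n / pop_total
        resp_share = respondent_counts.get(segment, 0) / resp_total
        ratio = resp_share / pop_share
        if ratio < min_ratio:
            flagged[segment] = round(ratio, 2)
    return flagged


# Hypothetical counts: customers per product vs. survey responses per product
population = {"product_a": 600, "product_b": 300, "product_c": 100}
respondents = {"product_a": 90, "product_b": 8, "product_c": 2}
print(underrepresented(population, respondents))
# {'product_b': 0.27, 'product_c': 0.2}
```

Here product B and product C customers make up 40% of the audience but only 10% of responses, so those are the groups to target with reminders or a different distribution channel.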

Fear of repercussion can also prevent people from responding. Imagine an employee experience survey where you ask about workload or try to get managerial feedback from direct reports. If the organization hasn’t set expectations around anonymity, employees with negative opinions may not respond at all.

How to reduce nonresponse bias

If you’re not getting the feedback you need, there are multiple ways to increase survey response rates while keeping feedback quality high. As with sampling bias, you could be missing out on feedback simply because your distribution method or survey timing is off.

Here are some of our top tips to try:

- Adjust survey timing to the type of feedback you’d like. For example, ask for customer service feedback immediately after an issue has been resolved.

- Keep your survey short (and let people know it’s short) so they’ll be more likely to take your survey.

- Try different survey distribution methods (email, web, or link), and ensure you’re following best practices for each type of distribution method.

- In the case of more sensitive topics, let folks know that the survey is anonymous, and that there will be no repercussions to their answers.

For a complete list of tactics, check out this guide on increasing survey response rates.

3. Survivorship bias: Getting a second opinion

Survivorship bias occurs when your survey is limited to customers, clients, and employees who have remained with you over time. As you can imagine, their feedback may be very different from the opinion of those who have churned or left your company.

It may not always be possible to reach those lost contacts, but when you can, including them in your survey sample can give you valuable data.

How to reduce survivorship bias

Use exit surveys to follow up with churned customers and with employees who are leaving the company. Try to understand their reasons so you can address them in the future. Prospect loss surveys, where you ask potential customers why they didn’t become actual customers, are another good source of feedback for growing your business.

Now that we’ve gone through the major types of selection bias that can affect your customer surveys, let’s talk about response bias.

To be clear, “response bias” is not the opposite of “nonresponse bias.” Nonresponse bias is about what happens when people who receive your survey choose not to respond. Response bias is about social pressures and survey design choices that distort the answers people do give.

4. Acquiescence bias: When it’s all about “yes”

While it’s always wonderful to hear you’re awesome, sometimes, you might wonder if it’s true. If all of your survey results come back positive, it could be a result of acquiescence bias, or the “yes-man” phenomenon.

Social norms and survey fatigue are two factors that lead to acquiescence bias. It’s easy to say “yes” out of politeness, even when it isn’t true. Add a long or complicated survey on top of that, and people can tire of giving thoughtful responses and default to positive answers just to get through the questions.

Note that these norms vary by country. In some places, people avoid ratings that seem too harsh or too positive and consistently gravitate toward the middle of the scale.

Luckily, there are some ways to design your questionnaire to avoid acquiescence bias.

How to mitigate acquiescence bias

The best way to minimize the chance of acquiescence bias is to use thoughtfully phrased questions and answer scales, so respondents can easily give their honest input instead of feeling that the answer they want isn’t there.

- Vary your questions and answers, and use multiple choice questions in addition to scale questions. Here’s a rundown of the different types of survey questions to use in your survey.

- Don’t use leading questions, which signal what you would like to hear rather than capturing how someone actually feels.

- Avoid closed-ended questions that only allow a “yes” or “no” answer, since they don’t offer enough nuance for people to express their actual opinion.

- Use a response scale that does not lend itself as easily to acquiescence bias (for example: “Definitely will not, Probably will not, Don’t know, Probably will, Definitely will”)

- When surveying internationally with templated CES, CSAT, or NPS software, compare the open-ended feedback with the initial rating to see whether cultural norms may be skewing the score.

5. Question order bias: Striving for consistency

Question order bias, also known as order-effects bias, can bias your survey responses through “priming” and the desire people have to give internally consistent answers.

For example, consider these two questions:

- How happy are you in your life overall?

- How happy are you with your marriage?

Studies have shown that when you ask the more specific question about marriage first, it influences how people answer the more general question about overall happiness. The specific question sets the context, and the general question becomes a summary of how people feel based on the questions they’ve already answered. If they say they’re happy in their marriage, they’re also more likely to say they’re happy overall, out of a desire to stay internally consistent.

People remember how they answered earlier questions and want their later answers to match. Question order bias has been found in a wide array of scenarios. You can learn more about examples and the impact of question order bias in this article.

How to limit question order bias

The goal of your survey may impact how you handle question order bias. Here are some methods to try, depending on your situation:

- Pilot-test your survey with priming effects in mind

- For satisfaction surveys, start with a general question that measures the entire experience before asking specific questions about each part of the experience

- For market research surveys, randomize question order

- Group questions by topic, but randomize the order of the questions within that topic
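If you build surveys in your own app, or want to check how a tool’s randomization behaves, the satisfaction-survey and grouping tactics can be combined in a few lines. The sketch below assumes a hypothetical satisfaction survey: it keeps the general question first, keeps questions grouped by topic, and shuffles the order within each topic. Seeding the shuffle with the respondent ID is an illustrative choice so the same person sees the same order if they reload the survey.

```python
import random

# Hypothetical satisfaction survey: one general question, then questions grouped by topic
GENERAL_QUESTION = "Overall, how satisfied are you with your experience?"
TOPICS = {
    "checkout": [
        "How easy was it to pay?",
        "How clear were the shipping options?",
    ],
    "support": [
        "How quickly was your issue resolved?",
        "How helpful was the support agent?",
    ],
}


def build_question_order(respondent_id):
    """Return the question order for one respondent.

    The general question always comes first; topics stay grouped and in a fixed
    order, but questions are shuffled within each topic.
    """
    rng = random.Random(respondent_id)  # same respondent ID -> same order on reload
    order = [GENERAL_QUESTION]
    for questions in TOPICS.values():
        shuffled = list(questions)  # copy so the master question list isn't mutated
        rng.shuffle(shuffled)
        order.extend(shuffled)
    return order


for question in build_question_order("respondent-42"):
    print(question)
```

For a market research survey where you randomize fully, you would shuffle the entire list instead of shuffling within topic groups.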

6. Answer option order/primacy bias: Answer order matters too

The order of your answers for each question also makes a difference in how customers respond to your survey, especially when it comes to multiple choice questions. There are two types of order bias at play: primacy bias and recency bias.

Primacy bias is when people choose one of the first few answer options, often because they haven’t taken the time to read through all the choices. Recency bias is when people choose one of the last options in the list, since the “most recent” option is the most memorable.

How to avoid answer order bias

First off, keep in mind that the fixes below apply only to multiple choice questions whose options have no natural order. You wouldn’t want to randomize the answer order of a rating scale question, where the order itself carries meaning; doing so would confuse respondents and lead to inaccurate answers.

Here are a few ways to avoid answer option order bias for multiple choice questions:

- Randomize the answer option order

- Limit your answer option list, but include a free response option to capture any choices you may have missed
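Both tactics fit in one small function. In this hypothetical sketch, `present_options` shuffles unordered multiple choice options, leaves ordered rating scales untouched (for the reasons above), and always pins the free-response option to the end so it isn’t buried mid-list. The feature names are made up; the scale is the one suggested in the acquiescence bias section.

```python
import random


def present_options(options, is_ordered_scale, free_response=None, rng=None):
    """Return answer options in display order for one respondent.

    Ordered scales keep their order because the order carries meaning. Unordered
    multiple choice options are shuffled. An optional free-response option is
    always appended last.
    """
    rng = rng or random.Random()
    shown = list(options)  # copy so the caller's list isn't reordered
    if not is_ordered_scale:
        rng.shuffle(shown)
    if free_response is not None:
        shown.append(free_response)
    return shown


# Hypothetical multiple choice question: which feature do you use most?
features = ["Dashboards", "Alerts", "Exports", "Integrations"]
print(present_options(features, is_ordered_scale=False,
                      free_response="Other (please specify)"))

# Rating scale: order is preserved
scale = ["Definitely will not", "Probably will not", "Don't know",
         "Probably will", "Definitely will"]
print(present_options(scale, is_ordered_scale=True))
```

Passing a seeded `random.Random` as `rng` makes a respondent’s option order reproducible, which helps when you need to audit what each person saw.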

7. Social desirability/conformity bias: The coolness factor

While acquiescence bias involves answering “yes” or agreeing, social desirability bias means that people answer questions in a way they think will make them look good (or more socially attractive). They conform to what they see as acceptable norms, which can lead them to exaggerate or downplay their habits, beliefs, and personal preferences.

In particular, lifestyle choices seen as “bad” or “unhealthy” (like drinking, smoking, expressing negative emotions, or other negative habits) may be misrepresented. More broadly, a person might misrepresent their views on the survey form to avoid appearing racist, sexist, or otherwise intolerant. They don’t want to be perceived in a negative way.

Some people may also exaggerate demographic information like income, education, or other social or lifestyle factors, even when the survey is anonymous.

How to reduce social desirability bias

These types of survey questions typically come up at the end of the customer survey, when you’re trying to learn more about customer demographics and psychographics. Careful question wording and cross-referencing answers for consistency can help you identify and reduce the impact of social desirability bias.

- Allow anonymous responses

- Carefully review how you’ve worded your questions and responses

- Use neutral wording that doesn’t suggest a socially preferred answer

- Randomly select questions from a larger pool of equivalent questions

- Check responses against what you know about your customers through previous answers or your existing customer data
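For the last tactic, if responses can be linked to customer records (which isn’t possible when you allow fully anonymous responses), a simple consistency check can highlight answers worth a second look. The sketch assumes hypothetical self-reported monthly order counts alongside order counts from your own records, with an arbitrary tolerance of 2. A mismatch suggests, but doesn’t prove, social desirability bias.

```python
# Hypothetical data: self-reported monthly orders from the survey, keyed by customer ID
survey_answers = {"c1": 10, "c2": 2, "c3": 6}
# Hypothetical monthly order counts from your own order records
recorded_orders = {"c1": 3, "c2": 2, "c3": 5}


def flag_inconsistent(answers, records, tolerance=2):
    """Return IDs whose self-reported value differs from the record by more than tolerance.

    tolerance=2 is an arbitrary illustrative value; customers missing from records are skipped.
    """
    return sorted(
        customer_id
        for customer_id, reported in answers.items()
        if customer_id in records and abs(reported - records[customer_id]) > tolerance
    )


print(flag_inconsistent(survey_answers, recorded_orders))  # ['c1']
```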

Summary

Selection and response bias can creep into almost any survey, but following survey design best practices can minimize their effect. It may not be possible to remove all forms of bias from every survey, but by focusing carefully on your goal and using strategies that address the most common types of survey bias, you should be able to get the results you need.

You can also rely on free survey makers like Delighted to provide a complete customer satisfaction survey solution and the resources you need to make survey creation simple, streamlined, and effective.